🎯 Multimodal AI agents: Sensing and responding to the world

Combining vision, audio, and text for richer interactions

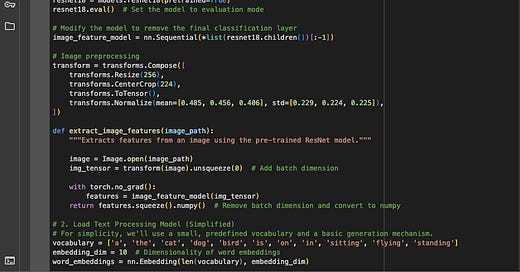

Multimodal AI agents are designed to perceive and interact with the world in a way that is closer to human perception, by processing information from multiple modalities at once.

By combining data from sources like vision (cameras), audio (microphones), and text, these agents can achieve a richer understanding of their environment and engage in more nuanced and effective communication.

This post explores the technical foundations of building multimodal AI agents and the critical role of diverse datasets in enabling their capabilities.

This is a practical guide to help decision-makers and board members navigate this evolving landscape.

We must grapple with fundamental questions about the nature of identity, consciousness, and the very essence of human existence.

This is a new sub-series of the Deep Dive series “How to build with AI agents,” which aims to help you proactively address potential issues and empower your IT and support agents with automation tools and AI for faster case resolution and insights.

It follows the series “How to build with AI agents.” This time, we focus on building on your existing foundation and on the unique aspects of AI agents.